CISL collaborators Professor Jonas Braasch and Samuel Chabot will present “An Immersive Virtual Environment for Congruent Audio-Visual Spatialized Data Sonifications” at the 23rd International Conference on Auditory Display at Penn State.

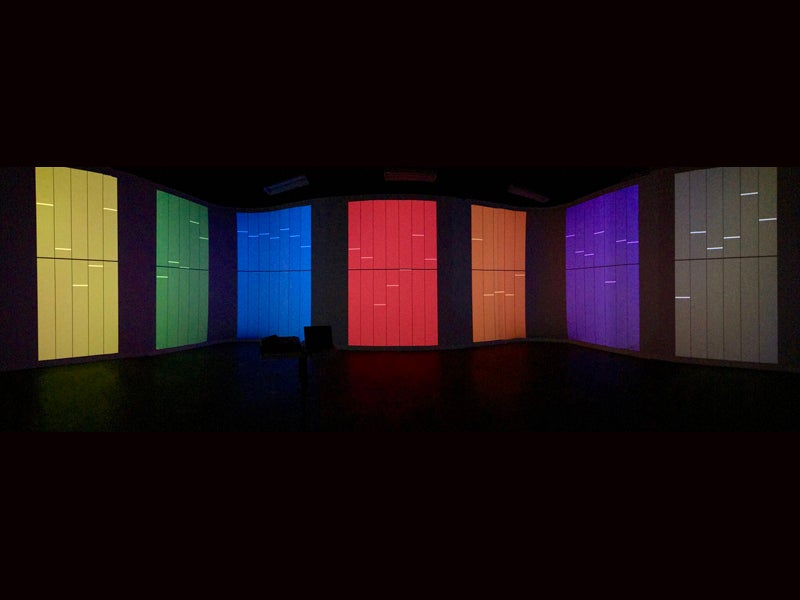

ABSTRACT: The use of spatialization techniques in data sonification provides system designers with an additional tool for conveying information to users. Often, spatialized data sets are meant to be experienced by a single user or a few users at a time. Projects at Rensselaer's Collaborative-Research Augmented Immersive Virtual Environment Laboratory allow even large groups of collaborators to work within a shared virtual environment system. The lab places equal emphasis on the visual and audio systems, with a nearly 360° panoramic display and a 128-loudspeaker array housed behind the acoustically transparent screen. The space allows dynamic switching between immersion in recreations of physical scenes and presentations of abstract or symbolic data. Content creation for the space is not a complex process: the entire display is essentially a single desktop, and straightforward tools such as the Virtual Microphone Control allow dynamic real-time spatialization. Because individual channels in the array can be targeted, audio-visual congruency is achieved. The loudspeaker array creates a soundfield of high spatial density within which users can freely explore, since the so-called “sweet spot” is virtually eliminated.
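The abstract credits audio-visual congruency to the ability to address individual channels of the 128-loudspeaker array while the display behaves like a single desktop. As a rough illustration only, the Python sketch below maps a horizontal pixel position on a panoramic desktop to the nearest loudspeaker channel. The layout is a hypothetical simplification (speakers evenly spaced on a full circle, channel 0 at 0°, indices increasing with azimuth, desktop spanning a configurable angle), and the function names are illustrative; it does not reproduce the lab's actual geometry or the Virtual Microphone Control's processing.

```python
import math

NUM_SPEAKERS = 128  # array size stated in the abstract


def pixel_to_azimuth(x_px: float, width_px: float,
                     span_deg: float = 360.0, start_deg: float = 0.0) -> float:
    """Convert a horizontal desktop pixel position to an azimuth in degrees.

    Hypothetical assumption: the panoramic desktop spans `span_deg` degrees
    linearly, with pixel 0 at `start_deg`.
    """
    if width_px <= 0:
        raise ValueError("width_px must be positive")
    return start_deg + (x_px / width_px) * span_deg


def azimuth_to_channel(azimuth_deg: float, num_speakers: int = NUM_SPEAKERS) -> int:
    """Return the index of the loudspeaker closest to `azimuth_deg`.

    Hypothetical assumption: speakers are evenly spaced on a full circle,
    with channel 0 at 0 degrees.
    """
    spacing = 360.0 / num_speakers
    wrapped = azimuth_deg % 360.0  # wrap negative or >360 angles into [0, 360)
    # Round half up, then wrap so angles just below 360 map back to channel 0.
    return math.floor(wrapped / spacing + 0.5) % num_speakers


def pixel_to_channel(x_px: float, width_px: float, **kwargs) -> int:
    """Route a visual object at pixel `x_px` to the loudspeaker behind it."""
    return azimuth_to_channel(pixel_to_azimuth(x_px, width_px, **kwargs))
```

For example, an object drawn halfway across a 1000-pixel-wide desktop that spans 360° sits at 180° and would be routed to channel 64. Snapping to a single channel is the simplest form of channel targeting; an actual system may instead distribute a source across several neighboring loudspeakers.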

Spatialized sound reproduction for telematic music performances in an immersive virtual environment, Samuel Chabot, J. Acoust. Soc. Am. 140, 3292 (2016)